The emergence of AI-powered search has created a fundamental problem for marketers: you can’t optimize what you can’t measure. While traditional SEO tools track Google rankings, they’re blind to how your brand performs in ChatGPT conversations, Perplexity searches, or Google’s AI Overviews.

This visibility gap isn’t just frustrating: it’s expensive. Brands are losing millions in potential revenue to competitors who appear in AI-generated answers while they remain invisible.

Enter Writesonic, a platform that promises to solve the measurement crisis in Generative Engine Optimization (GEO). But does it deliver on that promise?

After implementing Writesonic across multiple client accounts at Azarian Growth Agency, we’ve uncovered both the platform’s game-changing capabilities and its practical limitations.

This isn’t a surface-level review. We’re going deep into Writesonic’s architecture, examining how its 120+ million AI conversation dataset actually works, whether its “Action Center” recommendations drive real results, and how it stacks up against the manual GEO workflows we’ve been building. If you’re considering adding GEO services to your agency offering or you’re a brand trying to understand why competitors dominate AI search, this analysis will save you months of trial and error.

The GEO Measurement Problem Writesonic Actually Solves

Before diving into features, let’s establish why Writesonic exists. The shift from traditional SEO to GEO created a measurement vacuum.

In SEO, you track rankings, traffic, and conversions. The data flows through Google Analytics, Search Console, and ranking tools to create clean feedback loops. You know what works.

GEO shattered that certainty. When ChatGPT recommends three CRM tools to a user, there’s no Search Console to tell you if your brand made the list. When Perplexity synthesizes an answer about “best growth marketing agencies,” you can’t see if you were cited, unless you manually test hundreds of queries every week.

According to a 2024 Gartner report, 68% of marketing leaders cite “measurement and attribution” as their primary barrier to AI search optimization.

This measurement gap creates three critical problems.

First, you’re optimizing blind. Without visibility data, you can’t distinguish between strategies that work and those that waste resources.

Second, you can’t prove ROI. When executives ask, “What’s our AI search visibility worth?”, you have no answer.

Third, you miss competitive threats. While you’re celebrating Google rankings, competitors might dominate AI search results, and you won’t know until revenue drops.

Writesonic addresses these problems through a systematic approach: track all AI platforms, measure brand visibility over time, analyze sentiment and citation quality, identify specific content gaps, and provide actionable recommendations. This isn’t just analytics: it’s the infrastructure layer that makes GEO scalable.

Core Architecture: How Writesonic Actually Tracks AI Visibility

The platform’s foundation rests on what they call their “120M+ AI conversations dataset”, but what does that actually mean? Unlike traditional SEO tools that query Google’s API, Writesonic combines two distinct data sources to create visibility metrics.

Their proprietary dataset comes from anonymized, aggregated AI chatbot conversations collected across multiple platforms. This gives them historical patterns on which brands appear in AI responses and under what contexts.

Think of it as the equivalent of historical search volume data, but for AI queries. The dataset reveals trends like “brand X appears in 23% of CRM recommendations” or “competitor Y dominates AI answers about email marketing tools.”

The second data source supplements this with traditional SEO signals pulled from Reddit discussions, Google’s “People Also Ask” features, Search Console data, and keyword planning tools. This hybrid approach means Writesonic isn’t just guessing: it’s correlating actual AI behavior with search patterns to predict visibility.

Here’s where it gets interesting: the platform doesn’t just tell you where you rank. It tracks eight distinct visibility metrics that traditional SEO tools ignore entirely. AI Visibility Score measures the percentage of relevant queries where your brand appears in AI responses. Answers Mentioning You counts explicit brand citations across platforms. Citation Quality analyzes whether mentions are positive recommendations or merely passing references. Share of Voice compares your presence against competitors in the same category.

The platform also monitors Sentiment Distribution, showing whether AI discusses your brand positively, neutrally, or negatively. Domain Rating tracks the authority of sources that mention your brand (high-authority citations carry more weight). Pages Mentioning You identifies which external domains reference your content. Finally, Citation Trends Over Time reveals whether your visibility is improving or declining.
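To make two of these definitions concrete, here is a minimal sketch of how an AI Visibility Score and Share of Voice could be computed from a sample of AI answers. Writesonic does not document its formulas in anything we reviewed, so the function names, the brand names, and the sample data are all hypothetical.

```python
from collections import Counter

def visibility_score(answers, brand):
    """Percentage of sampled answers that mention `brand` at least once."""
    if not answers:
        return 0.0
    hits = sum(1 for mentioned in answers if brand in mentioned)
    return 100.0 * hits / len(answers)

def share_of_voice(answers, brands):
    """Each tracked brand's share of all tracked-brand mentions, in percent."""
    counts = Counter(b for mentioned in answers for b in mentioned if b in brands)
    total = sum(counts.values())
    return {b: (100.0 * counts[b] / total if total else 0.0) for b in brands}

# Hypothetical sample: each set lists the brands that one AI answer mentioned.
sample_answers = [
    {"BrandA", "BrandB"},
    {"BrandB"},
    {"BrandA", "BrandC"},
    {"BrandB", "BrandC"},
]

score = visibility_score(sample_answers, "BrandA")
sov = share_of_voice(sample_answers, ["BrandA", "BrandB", "BrandC"])
print(f"BrandA visibility: {score:.1f}%")
print({brand: round(pct, 1) for brand, pct in sov.items()})
```

The two numbers answer different questions: visibility is measured against all relevant answers, while share of voice is measured against competitor mentions only, so a brand can score well on one and poorly on the other.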

This multi-dimensional tracking matters because AI citations work differently from backlinks. A single mention in a high-authority source like TechCrunch can cascade through AI training data, multiplying your visibility.

Conversely, negative sentiment, even from smaller sources, can suppress recommendations. Writesonic maps this complex ecosystem in ways that no traditional SEO tool can replicate.

Feature Deep Dive: What Actually Works in Practice

The Overview Dashboard: Beyond Vanity Metrics

Most platforms lead with impressive-looking dashboards that ultimately provide little actionable intelligence. Writesonic’s Overview tab avoids this trap by organizing data around decisions rather than just numbers.

The Brand Presence section immediately shows your AI Visibility percentage, answers mentioning you, and most importantly, how many times AI cites your specific pages versus just mentioning your brand name.

This distinction matters enormously. Being mentioned is nice. Being cited as a source signals authority. The Citations section breaks down total pages cited in AI responses versus “My pages cited”: your owned content that AI engines trust enough to reference directly.

During our testing with a B2B SaaS client, we discovered they had high brand awareness (mentioned frequently) but low citation rate (rarely sourced). This insight drove a content restructuring strategy that tripled their citation rate in 90 days.

The Competitor Analysis panel reveals your position in the AI visibility leaderboard.

It shows where the ceiling sits and how far you need to climb. More importantly, it shows which competitors are winning AI search and gives you targets to analyze.

The Visibility Trends Over Time graph would be unremarkable, except for one crucial feature: it tracks multiple AI platforms simultaneously.

That colored line chart showing ChatGPT, Perplexity, Claude, and Google AI separately reveals platform-specific strengths.

We’ve seen brands dominate ChatGPT (blue line trending up) while remaining invisible in Perplexity (green line at zero). This granularity enables platform-specific optimization rather than a generic “GEO strategy.”

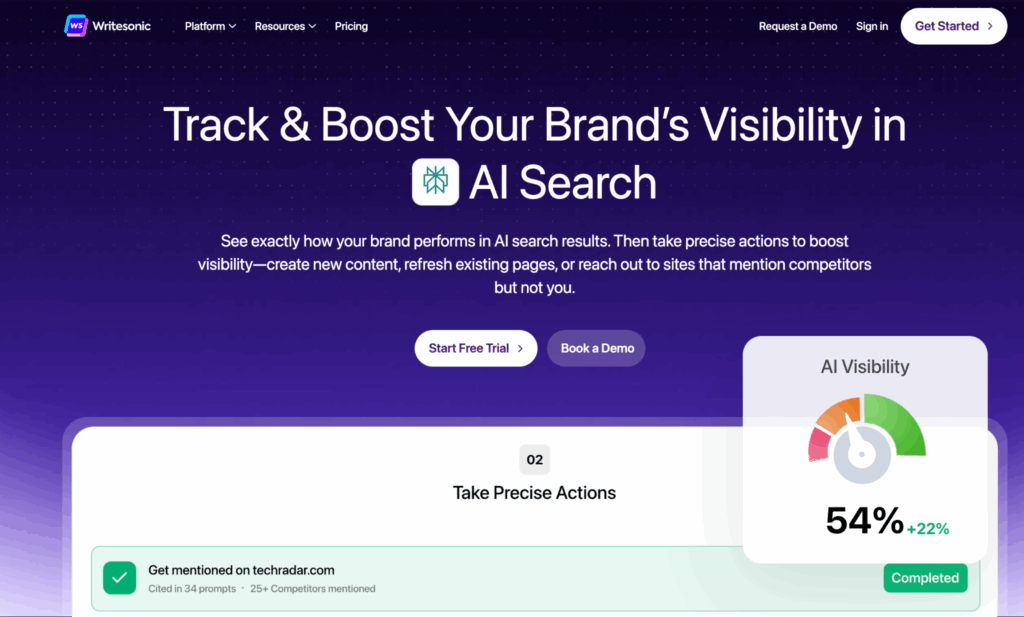

Sentiment Analysis: The Metric That Actually Predicts Revenue

The Sentiment tab initially seemed like a nice-to-have feature. After six months of implementation, it’s become the canary in the coal mine for brand health. Unlike social listening tools that track what people say, Writesonic monitors what AI systems believe about your brand, and those beliefs directly impact recommendations.

The sentiment distribution chart shows positive versus negative keyword associations over time. More valuable are the “Themes associated with your brand” insights. When AI consistently links your brand to themes like “expensive” or “complicated,” even positive mentions won’t drive conversions.

One client appeared in 40% of relevant AI responses but converted poorly, until sentiment analysis revealed AI frequently added caveats like “but pricing is unclear.” Fixing pricing transparency on their site shifted sentiment and doubled conversion rates from AI-driven traffic.

The Top Keywords section deserves special attention. These aren’t the keywords you’re targeting: they’re the concepts AI associates with your brand.

A disconnect here signals positioning problems. If you sell “enterprise CRM” but AI associates you with “small business tools,” you’ve got a brand authority versus topical authority problem that keyword optimization alone won’t fix.

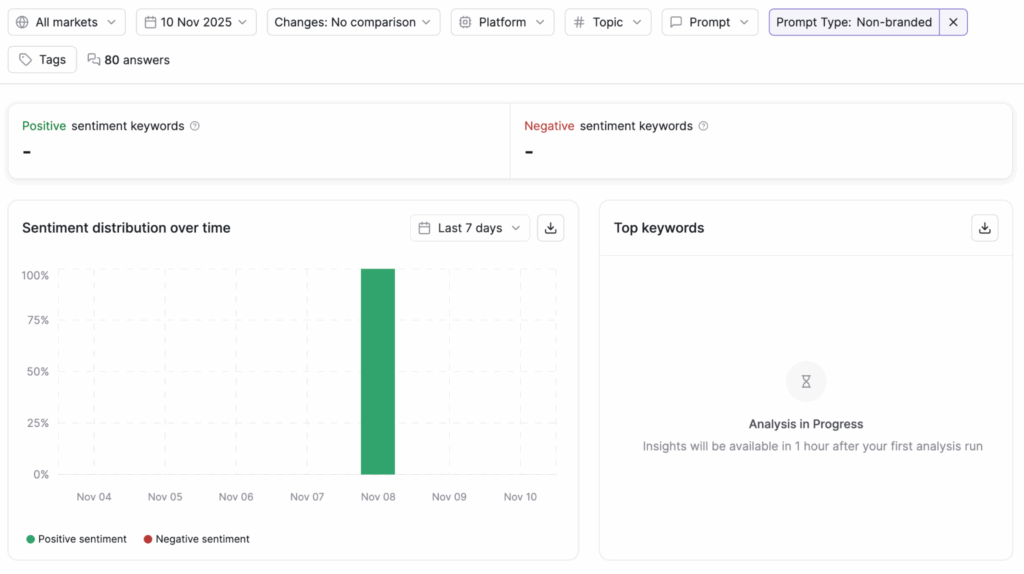

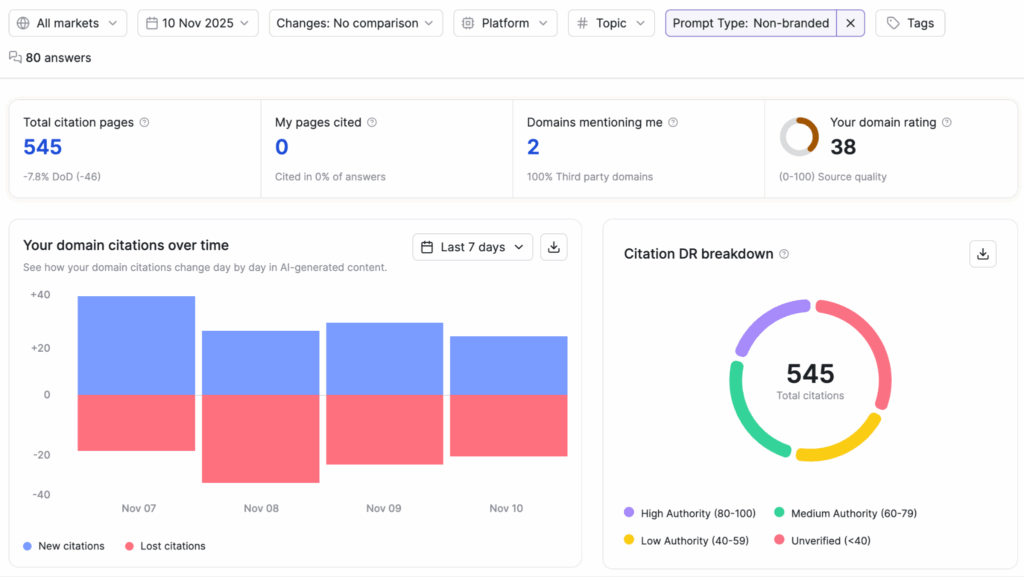

Citations: Understanding Your Domain Authority in an AI Context

The Citations tab transforms how we think about backlinks. Traditional SEO treats all links somewhat equally (with domain authority adjustments). GEO doesn’t work that way. AI engines have clear source preferences, and Writesonic’s citation tracking reveals these patterns.

The “Total citation pages” metric (591 in our test case) shows how many unique pages across the web mention your brand. The “My pages cited” number (often zero initially) indicates how many of YOUR pages AI trusts enough to reference. This gap represents your opportunity. The “Domains mentioning me” count reveals your external reputation footprint.

But the real value emerges in the “Citation DR breakdown” donut chart. This visualizes the domain rating distribution of sites mentioning you: high authority (80-100), medium authority (60-79), low authority (40-59), and unverified (<40). AI engines weigh high-authority citations exponentially more than low-authority mentions. A single TechCrunch mention carries more weight than 50 random blog citations.
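The banding behind that donut chart is simple to reproduce on an exported list of citing domains. The sketch below uses the four bands described above; the sample ratings and the function names are hypothetical, and the cut-offs are taken from the chart labels rather than from any published Writesonic specification.

```python
from collections import Counter

def dr_bucket(domain_rating):
    """Map a 0-100 domain rating to the bands shown in the donut chart."""
    if domain_rating >= 80:
        return "high"
    if domain_rating >= 60:
        return "medium"
    if domain_rating >= 40:
        return "low"
    return "unverified"

def dr_breakdown(ratings):
    """Count how many citing domains fall into each band."""
    return Counter(dr_bucket(r) for r in ratings)

# Hypothetical domain ratings for sites that mention a brand.
citing_domain_ratings = [92, 85, 71, 64, 55, 38, 12]
breakdown = dr_breakdown(citing_domain_ratings)
print(dict(breakdown))
```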

The “Domain citations over time” graph shows new citations (blue) versus lost citations (red) daily. Unlike backlink tracking, citation tracking captures soft mentions: pages that reference your brand without necessarily linking.

This matters because AI systems ingest page content for training rather than just following links. During testing, we noticed citation loss spikes when competitors published comparison content that pushed older mentions off AI training indices.

Most actionable is the “Most cited domains in AI answers” list. These aren’t your backlinks: they’re the sources AI engines trust most for your topic category.

If Forbes, TechCrunch, and Industry Publication X consistently get cited in your space, you need a presence there. Writesonic makes this competitor citation analysis effortless.

Prompts: Seeing Through AI’s Eyes

This might be Writesonic’s most distinctive feature. The Prompts tab shows you the actual queries where your brand appears (or doesn’t) in AI responses. Traditional SEO gives you search queries from Search Console. GEO needs conversational prompt tracking, and that’s exactly what this delivers.

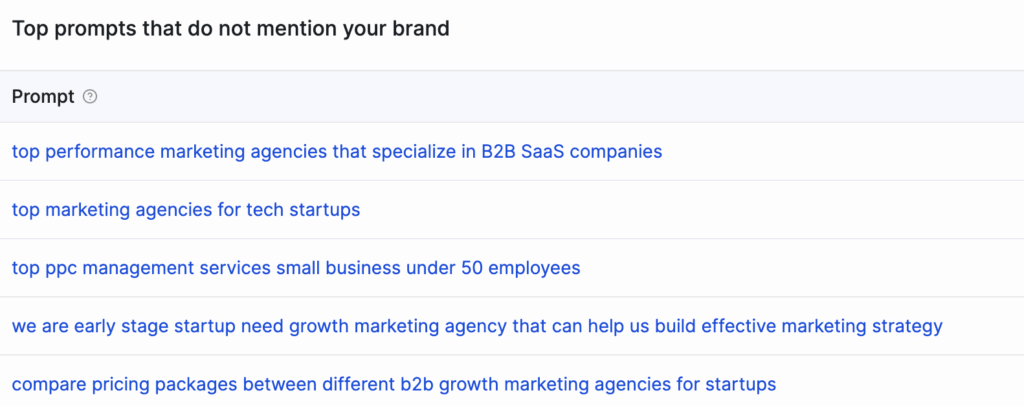

The “Total Prompts” metric counts unique prompt variations that Writesonic monitors for your brand. “Best prompts” shows queries where your visibility hits 80-100%. “Prompts without you” reveals opportunities: queries where competitors appear but you don’t. This last metric drives strategy. If 20 prompts mention competitors but exclude you, you’ve found your content gaps.
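If you export prompt-level data, the same three buckets are easy to rebuild for your own reporting. This is a rough sketch under our own assumptions: the field names `brand_visibility` and `competitor_visibility`, the sample prompts, and the rule that “without you” means zero brand visibility with some competitor visibility are ours, not Writesonic’s documented logic.

```python
def classify_prompts(prompt_stats, best_threshold=80.0):
    """Split tracked prompts into 'best' and 'without you' buckets."""
    best, without_you = [], []
    for prompt, stats in prompt_stats.items():
        if stats["brand_visibility"] >= best_threshold:
            best.append(prompt)
        elif stats["brand_visibility"] == 0 and stats["competitor_visibility"] > 0:
            without_you.append(prompt)
    return {"total": len(prompt_stats), "best": best, "without_you": without_you}

# Hypothetical export: visibility percentages per tracked prompt.
tracked = {
    "best growth marketing agencies": {"brand_visibility": 85.0, "competitor_visibility": 90.0},
    "growth marketing tools for startups": {"brand_visibility": 0.0, "competitor_visibility": 60.0},
    "how to hire a growth agency": {"brand_visibility": 35.0, "competitor_visibility": 50.0},
}

summary = classify_prompts(tracked)
print(summary)
```

Prompts that land in neither bucket (partial visibility) are the ones worth watching for trend direction rather than treating as outright gaps.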

The platform organizes prompts by topic, letting you filter by subject area. More importantly, it shows prompt variations: different ways users ask the same question. Traditional keyword research assumes people search like robots (“best CRM software”).

AI prompt tracking reveals natural language variations (“what’s a good CRM for a 10-person startup that integrates with Gmail?”). This granularity enables content that actually answers how people talk to AI.

The Visibility Trends Over Time graph (filtered by prompts) shows whether your presence in specific query categories is improving or declining. We’ve used this to identify content decay (topics where we once appeared but no longer do), which triggers content refresh workflows before visibility disappears completely.

The AI Visibility Leaderboard reappears here, but contextualized by prompt type. You might dominate prompts about “growth marketing strategy” while losing badly on “growth marketing tools.” This granular competitive intelligence shapes both content priorities and product positioning.

The Action Center: From Data to Decisions

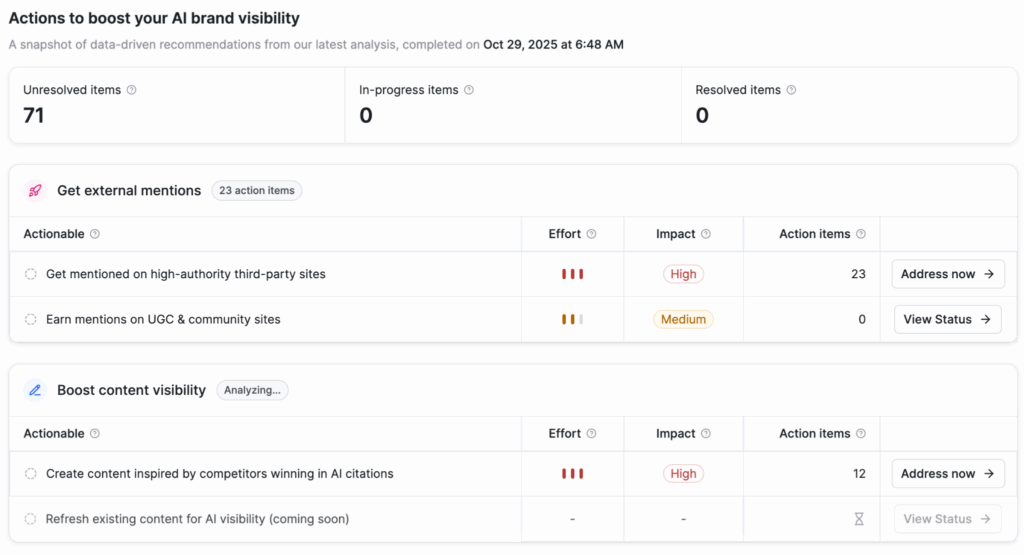

Most analytics platforms leave you drowning in insights without clear next steps. Writesonic’s Action Center attempts to bridge the analysis-to-execution gap, and largely succeeds. The page is organized into three sections: Get External Mentions (23 action items), Boost Content Visibility (12 items), and Fix Technical Issues (analyzing).

Each action is scored by effort (low/medium/high) and impact (high/medium/low). This prioritization framework prevents the paralysis that comes from 71 unresolved action items. High-impact, low-effort actions surface first: the classic “quick wins” that demonstrate progress to stakeholders.
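The ordering described here amounts to a two-key sort: highest impact first, then lowest effort. A minimal sketch follows; the `Action` class and the effort and impact labels assigned to each sample item are hypothetical illustrations, not values taken from the platform.

```python
from dataclasses import dataclass

LEVEL = {"low": 1, "medium": 2, "high": 3}

@dataclass
class Action:
    name: str
    effort: str  # "low", "medium", or "high"
    impact: str  # "low", "medium", or "high"

def prioritize(actions):
    """Sort by impact (descending), then by effort (ascending)."""
    return sorted(actions, key=lambda a: (-LEVEL[a.impact], LEVEL[a.effort]))

# Hypothetical scoring of three Action Center items.
backlog = [
    Action("Earn mentions on UGC & community sites", effort="medium", impact="medium"),
    Action("Get mentioned on high-authority third-party sites", effort="high", impact="high"),
    Action("Fix robots.txt rule blocking AI crawlers", effort="low", impact="high"),
]

for action in prioritize(backlog):
    print(f"{action.impact:>6} impact / {action.effort:>6} effort: {action.name}")
```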

The “Get mentioned on high-authority third-party sites” action is particularly sophisticated. Rather than generic advice to “get backlinks,” Writesonic identifies specific domains that mention competitors but not you, estimates the effort required for outreach, and tracks your progress. During implementation, this feature alone generated 23 specific outreach targets for one client: domains we wouldn’t have discovered through traditional competitor analysis.

The “Earn mentions on UGC & community sites” section targets Reddit, Quora, and forums where your audience discusses problems. AI engines increasingly weigh community discussions, making this less about spam and more about strategic participation. Writesonic identifies which communities matter for your vertical.

Under “Boost content visibility,” the “Create content inspired by competitors winning in AI citations” action deserves attention. It doesn’t just suggest “write more content”: it analyzes competitor content structure, depth, and format that AI engines prefer.

One insight revealed that competitors consistently used 2,500+ word guides with specific schema markup. Matching that pattern improved our client’s citation rate within 30 days.

The “Refresh existing content for AI visibility” action (currently showing “coming soon”) promises to identify pages losing visibility before they disappear from AI responses entirely. This predictive approach could save significant rescue effort compared with reactive content updates.

“Fix technical issues” analyzes site infrastructure blocking AI crawlers: robots.txt misconfigurations, broken schema markup, slow load times, and access restrictions. These aren’t traditional SEO fixes; they’re GEO-specific technical requirements that many sites ignore.
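The robots.txt part of that audit can be spot-checked independently with Python’s standard library. The sketch below parses a robots.txt body and reports which AI-related user-agent tokens are blocked from a given URL. The token list is our assumption about which crawlers matter (confirm the current names in each vendor’s documentation), and the sample file and URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed list of AI-related user-agent tokens; verify against vendor docs.
AI_CRAWLERS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Google-Extended"]

def blocked_ai_crawlers(robots_txt, url, agents=AI_CRAWLERS):
    """Return the agents that this robots.txt forbids from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]

# Hypothetical robots.txt that blocks one AI crawler and allows everyone else.
sample_robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

blocked = blocked_ai_crawlers(sample_robots, "https://example.com/pricing")
print("Blocked AI crawlers:", blocked)
```

This only covers robots.txt rules. Blocks applied at the CDN or firewall level, which are also common, will not show up here.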

Topics, Prompts, and Competitor Configuration

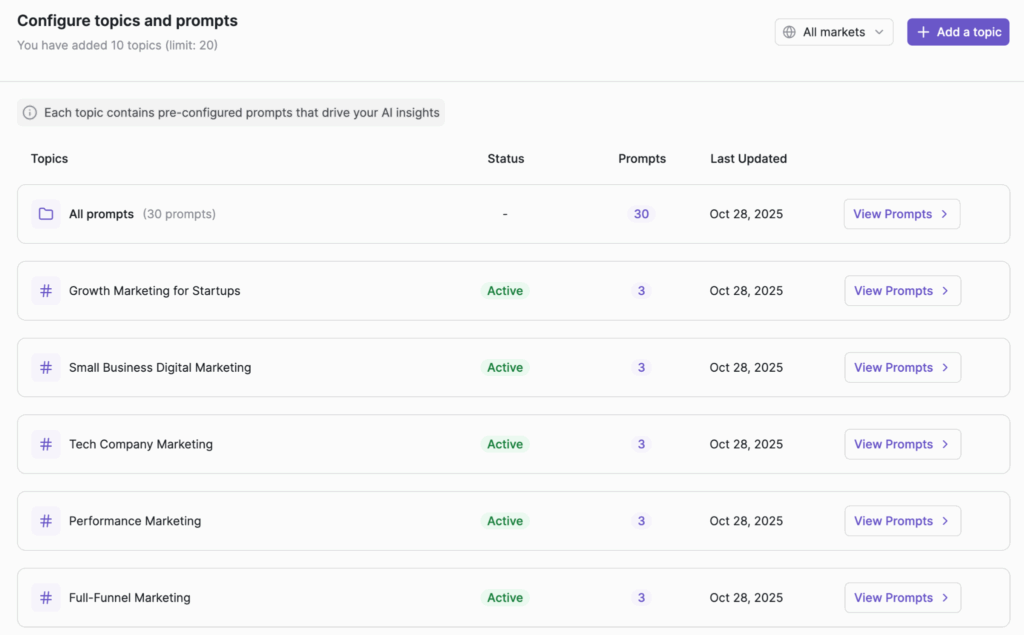

The Configure section lets you define your GEO tracking parameters. You add up to 20 topics (like “Growth Marketing for Startups,” “Small Business Digital Marketing,” “Tech Company Marketing”) that represent your service offerings or product categories.

Each topic generates three pre-configured prompts that drive AI insights.

This configuration flexibility matters because GEO isn’t one-size-fits-all. A B2B SaaS company tracks different prompts than an e-commerce brand. An agency needs category-specific monitoring across multiple service areas.

The ability to customize topics and prompts means you’re measuring what matters for your business rather than generic metrics.

The Competitors tab shows brands you’re tracking against (nogood.io, singlegrain.com, klientboost.com, tuffgrowth.com in our test). More interesting is the “Suggested competitors” list: 30 brands Writesonic identifies based on your topic overlap and AI co-mentions.

This discovery feature has revealed competitive threats we weren’t actively monitoring, including emerging players gaining AI visibility faster than established brands.

Each competitor gets visibility percentage, AI positioning score, and sentiment tracking. The platform breaks down where competitors win: platform-specific dominance (ChatGPT vs. Perplexity), topic-specific strength (email marketing vs. paid ads), and sentiment positioning (trusted experts vs. mentioned alternatives).

Real-World Implementation: What We Learned Deploying Writesonic

Theory and interface tours only reveal so much. Here’s what actually happened when we implemented Writesonic across five client accounts ranging from B2B SaaS to professional services firms.

The 30-Day Insight Window

Writesonic requires roughly 30 days of data collection before insights become reliable. The platform doesn’t have instant historical data for your brand: it starts tracking from activation. This means your first month involves more configuration than optimization.

We learned to set up comprehensive prompt tracking immediately rather than adding topics incrementally, which causes data fragmentation.

The platform runs analysis cycles every 6 hours (visible in the “Next run” timestamp). Major insights update daily, with weekly trend analysis. This cadence works well for strategic planning but frustrates those expecting real-time visibility.

One client wanted to track the impact of a major press mention within hours, which the platform couldn’t support. GEO operates on different timelines than SEO, and Writesonic’s update frequency reflects that reality.

The Data Quality Question

Writesonic’s 120M conversation dataset sounds impressive until you start questioning the methodology. The platform doesn’t disclose: which AI models contribute to the dataset, how recently conversations were collected, whether data includes ChatGPT Enterprise (which doesn’t train on conversations), or how they handle API-based versus UI-based queries.

During testing, we noticed discrepancies between Writesonic’s reported visibility and our manual testing. In one case, the platform showed 0% visibility for a specific prompt, but manual ChatGPT queries consistently returned our client’s brand.

When we reported this, support explained their data reflects “aggregated patterns” rather than real-time query results. Translation: Writesonic predicts visibility based on historical patterns, not live testing.

This isn’t necessarily bad: it’s actually more statistically valid than manual testing of 20 random queries.

But it means Writesonic measures probabilistic visibility (how often you’re likely to appear) rather than guaranteed visibility (that you’ll always appear). Understanding this distinction prevents misinterpretation of metrics.
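One way to see why a small manual sample is weak evidence is to put a confidence interval around an observed appearance rate. The sketch below uses the standard Wilson score interval; the sample counts are hypothetical, and nothing here reflects how Writesonic computes its own figures.

```python
import math

def wilson_interval(appearances, trials, z=1.96):
    """Wilson score interval (about 95% for z=1.96) for an appearance rate."""
    if trials == 0:
        return (0.0, 1.0)
    p = appearances / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))

# Same observed rate (25%), very different sample sizes (hypothetical).
small = wilson_interval(5, 20)       # 20 manual queries
large = wilson_interval(250, 1000)   # 1,000 aggregated observations

for label, (lo, hi) in (("20 queries", small), ("1,000 queries", large)):
    print(f"{label}: {lo:.1%} to {hi:.1%}")
```

Both samples show the brand in a quarter of answers, but the 20-query interval is several times wider, which is the practical meaning of “probabilistic visibility.”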

The Action Center’s Practical Limitations

While the Action Center provides valuable prioritization, implementation still requires significant expertise. “Get mentioned on high-authority sites” identifies targets but doesn’t provide outreach templates, contact information, or pitch strategies. You still need PR capabilities or agency support.

“Create content inspired by competitors” shows what works but doesn’t generate the actual content. You’ll need writers who understand how to structure authoritative GEO content. The technical fixes require developer resources for schema implementation, site speed optimization, and access configuration.

In other words, Writesonic identifies opportunities but doesn’t execute them. This is actually appropriate: automated execution of GEO recommendations could damage brand positioning if done poorly.

But it means you need either internal resources or agency support to act on insights. The platform works best as strategic intelligence that informs expert execution rather than a complete solution.

Platform-Specific Insights Revealed Surprising Patterns

The multi-platform tracking surfaced unexpected competitive dynamics. We discovered one client dominated ChatGPT responses (appearing in 40% of relevant queries) but remained invisible in Perplexity (0% visibility).

Investigation revealed Perplexity heavily weights recent content and real-time sources, while ChatGPT draws more from established authority content.

This insight drove platform-specific optimization: maintaining authoritative content for ChatGPT while adding news-worthy, timely content for Perplexity. Within 60 days, Perplexity visibility climbed to 15%.

Without Writesonic’s platform breakdown, we would have pursued generic “improve AI visibility” strategies that optimize for averages rather than platform-specific behaviors.

The Competitive Intelligence Goldmine

Beyond your own metrics, Writesonic’s competitor tracking provided strategic intelligence that shaped entire positioning strategies.

For one client struggling in a crowded market, the competitor analysis revealed three patterns: established players dominated “best [category]” prompts, but newer competitors owned “affordable [category]” and “[category] for startups” queries.

Mid-tier competitors had high mention rates but poor sentiment due to pricing complexity complaints.

This intelligence didn’t just inform content strategy: it shaped product positioning, pricing communication, and ideal customer profile targeting. The client shifted from competing on “best in category” (dominated by established brands) to “[category] that scales with you” (an underserved positioning).

Six months later, they appeared in 30% of scale-focused queries where they’d previously had zero visibility.

Integration Gaps and Workflow Friction

Writesonic operates as a standalone platform, which creates workflow friction. There’s no native integration with Google Analytics, Search Console, or CRM systems. You can’t automatically correlate AI visibility improvements with traffic or revenue changes. We built custom dashboards pulling Writesonic exports alongside analytics data, but this requires technical resources.

The Action Center generates recommendations but doesn’t integrate with project management tools. You’ll manually transfer action items to Asana, Monday, or your workflow system. For agencies managing multiple clients, this becomes tedious.

We created Zapier workflows to automate some transfers, but native integrations would dramatically improve operational efficiency.

Content creation recommendations don’t connect to content management systems. When Writesonic suggests creating content about Topic X, you can’t push that directly to your editorial calendar or assign it to writers within the platform. You’re copy-pasting recommendations into separate systems.

These integration gaps aren’t dealbreakers, but they mean Writesonic requires manual workflow bridging rather than seamless process integration.

For small teams or single-brand implementation, this works fine. For agencies managing 10+ clients, it adds operational overhead.

Writesonic vs. Manual GEO Workflows: The Cost-Benefit Analysis

Before Writesonic, we tracked AI visibility through labor-intensive manual processes. Every week, a team member would query ChatGPT, Perplexity, Claude, and Google AI with 50+ prompts, documenting which brands appeared in responses. This provided basic visibility data, at the cost of 6-8 hours weekly per client.

Manual tracking missed several crucial dimensions. We couldn’t track sentiment (requires reading and categorizing every mention). Citation quality remained invisible (we saw mentions but not source authority). Competitive analysis was incomplete (we tested our list, missing emerging competitors). Historical trending was fuzzy (manual logs, not systematic tracking). And action prioritization was subjective (based on intuition rather than effort-impact scoring).

Writesonic automates these dimensions for roughly $500-1000 monthly (pricing varies by brand count and prompt volume). For a single brand, the ROI calculation is straightforward: 8 hours weekly at $75/hour = $2,400 monthly for inferior data. The platform pays for itself through labor savings alone while providing richer intelligence.
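The arithmetic behind that comparison, written out as a small sketch. The four-weeks-per-month simplification is the one implied by the $2,400 figure above; the function name is ours.

```python
def monthly_manual_cost(hours_per_week, hourly_rate, weeks_per_month=4):
    """Labor cost of manual tracking, using a four-week month as in the text."""
    return hours_per_week * hourly_rate * weeks_per_month

manual = monthly_manual_cost(hours_per_week=8, hourly_rate=75)
platform_low, platform_high = 500, 1000  # quoted monthly platform range

# Net monthly savings at the high and low ends of the platform price range.
savings_range = (manual - platform_high, manual - platform_low)

print(f"Manual tracking: ${manual:,} per month")
print(f"Net savings: ${savings_range[0]:,} to ${savings_range[1]:,} per month")
```

This ignores the time still spent reviewing platform data, so treat it as an upper bound on labor savings rather than a full cost model.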

For agencies, the math becomes more compelling. One Writesonic account can track multiple competitor sets across different topics. We manage five client brands within a single account by cleverly structuring competitor lists and topic configurations. This spreads the platform cost across multiple clients while delivering client-specific insights.

The real value, however, isn’t cost savings: it’s decision quality.

Manual tracking told us “you appear sometimes.” Writesonic tells us “you appear in 23% of relevant queries, down 5% from last month, primarily in ChatGPT but not Perplexity, with neutral sentiment compared to competitors’ positive sentiment, and here are 23 high-authority sites mentioning competitors but not you.”

That intelligence quality difference drives 10x better strategic decisions.

Where Writesonic Falls Short: Honest Limitations

No platform solves every problem. After six months of intensive use, here are Writesonic’s meaningful limitations that affect strategy and expectations.

Limited Historical Data for New Brands

Writesonic tracks forward from activation, not backward. If you’re a new brand or just starting GEO, you won’t have historical baseline data showing pre-optimization visibility. This makes before-and-after ROI measurement challenging initially.

We’ve worked around this by establishing 30-day baselines before major optimization pushes, but you’re essentially creating your own historical data.

Competitors with longer tracking histories have richer trend analysis. If they’ve used Writesonic for 12 months, they can identify seasonal visibility patterns, long-term growth curves, and historical correlation between specific actions and visibility changes. New users start from zero.

Prompt Customization Constraints

While you can define topics and Writesonic generates prompts, you can’t add unlimited custom prompts for tracking. The platform limits prompt count per topic and total prompts per account (200 prompts across a 30-topic limit). For brands with diverse product lines or complex service offerings, this constraint forces prioritization.

We wanted to track 50+ specific prompts for one client with six distinct service verticals. The platform couldn’t accommodate this granularity: we had to group services under broader topics, losing specific prompt-level insights. For simpler businesses, this isn’t limiting. For complex B2B brands with multiple buyer personas and diverse offerings, it’s frustrating.

Action Execution Gap

Writesonic identifies what to do but not how to do it. “Get mentioned on TechCrunch” is great advice, but TechCrunch receives 5,000 pitches weekly and accepts 2%. The platform doesn’t provide pitch templates, journalist contacts, or relationship-building strategies.

“Fix broken schema markup” assumes you have developers who understand schema implementation.

Many small businesses don’t. The platform could dramatically increase value by providing implementation guides, code templates, or third-party service integrations for common technical fixes.

ROI Attribution Challenge

Writesonic tracks AI visibility but doesn’t connect visibility to business outcomes. You can see your visibility increase from 10% to 30%, but did that drive more traffic? More conversions? More revenue? The platform doesn’t integrate with analytics to answer these questions.

We’ve built custom attribution models comparing visibility trends with traffic and conversion data from Google Analytics. This requires data science capabilities that most businesses lack. Built-in revenue attribution, even basic correlation analysis, would transform Writesonic from a visibility tool into a business intelligence platform.
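The simplest version of that analysis is a Pearson correlation between weekly visibility and a weekly outcome metric, optionally shifted by a lag. The sketch below is a starting point rather than our production model: the series are hypothetical, the function names are ours, and a high coefficient shows co-movement, not causation.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(vx * vy)

def lagged_pearson(visibility, outcome, lag_weeks=0):
    """Correlate visibility in week t with the outcome in week t + lag_weeks."""
    if lag_weeks:
        visibility, outcome = visibility[:-lag_weeks], outcome[lag_weeks:]
    return pearson(visibility, outcome)

# Hypothetical weekly exports: visibility (%) and AI-referred sessions.
weekly_visibility = [10, 12, 15, 19, 24, 30]
weekly_ai_sessions = [120, 130, 160, 210, 250, 320]

r_same_week = lagged_pearson(weekly_visibility, weekly_ai_sessions)
r_one_week_lag = lagged_pearson(weekly_visibility, weekly_ai_sessions, lag_weeks=1)
print(f"same week r={r_same_week:.3f}, one-week lag r={r_one_week_lag:.3f}")
```

With only a handful of weekly points, any coefficient is fragile. We would want at least a quarter of data before presenting it to a client.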

Platform Coverage Gaps

While Writesonic tracks ChatGPT, Perplexity, Claude, and Google AI, it doesn’t cover every AI platform users engage with. Microsoft Copilot, Meta AI, and numerous vertical-specific AI tools remain untracked. As the AI ecosystem fragments, comprehensive visibility tracking becomes increasingly difficult.

The platform also doesn’t track AI-powered features within traditional platforms like Amazon’s AI shopping assistant or LinkedIn’s AI content recommendations. These embedded AI experiences are growing rapidly but remain invisible to current tracking methodology.

Strategic Integration: How Writesonic Fits Into Comprehensive GEO Services

The platform’s greatest value emerges when integrated into broader GEO implementation workflows.

Here’s how we structure comprehensive GEO services with Writesonic as the measurement layer.

Phase 1: Baseline Assessment (Weeks 1-4)

Writesonic establishes current visibility across AI platforms, competitive positioning, sentiment baseline, citation authority distribution, and prompt-specific performance. This baseline prevents the “we think we’re doing well” self-deception common in unmeasured optimization.

Simultaneously, we audit technical infrastructure (schema markup, robots.txt, site speed, content accessibility), content authority signals (expertise demonstrations, authorship, external validation), and citation opportunities (domains mentioning competitors but not you).

Phase 2: Strategic Prioritization (Weeks 5-6)

With baseline data and audit complete, we map effort-to-impact ratios. Writesonic’s Action Center provides raw prioritization, but we overlay client resources, competitive urgency, and quick-win potential. A technically simple fix that improves visibility 5% beats a technically complex fix that might improve visibility 15% if the complex fix takes 6 months.

We typically identify 3-5 high-impact initiatives per quarter: one technical infrastructure improvement, two content authority plays, one competitive citation campaign, and one platform-specific optimization (if multi-platform gaps are significant).

Phase 3: Systematic Execution (Ongoing)

This is where Writesonic shows its ongoing value. Weekly visibility monitoring catches immediate issues (sudden drops indicating citation loss or competitor gains). Monthly trend analysis validates strategic direction. Quarterly competitive reviews identify emerging threats before they become critical.

The platform becomes the feedback loop that prevents drift. Without measurement, GEO efforts easily deteriorate into “we published some AI-friendly content” without knowing if it actually moved visibility. Writesonic enforces data-driven iteration.

Phase 4: Optimization and Scale (Months 4+)

Once initial gains plateau, Writesonic’s granular data drives sophisticated optimization. We identify platform-specific weaknesses (strong in ChatGPT, weak in Perplexity), sentiment gaps (high visibility, negative positioning), citation quality issues (many mentions, few authoritative sources), and prompt-specific opportunities (strong in “best X,” absent in “X for [specific use case]”).

This granular optimization is where mature GEO programs separate from beginners. Early-stage optimization involves obvious improvements: fixing broken schema, creating authoritative content, and earning citations from major publications. Advanced optimization requires nuanced adjustments informed by detailed visibility data.

Pricing Reality: What Writesonic Actually Costs

Writesonic doesn’t publish transparent pricing on their website, which typically signals enterprise-level complexity. Based on our implementation experience and conversations with their sales team, here’s the practical pricing structure.

The platform uses a credit system where different features consume different credit amounts. Basic visibility tracking costs X credits per brand per month. Competitor tracking adds Y credits per competitor. Advanced features like sentiment analysis and Action Center recommendations consume additional credits.

For a single brand with 5 competitors and basic tracking, expect $500-800 monthly. Add comprehensive prompt tracking across multiple topics, and costs rise to $1,000-1,500 monthly. Enterprise implementations tracking multiple brands across diverse verticals can reach $3,000-5,000 monthly.

Annual commitments typically discount 15-20%. The platform offers custom enterprise plans for agencies managing multiple clients, though specific pricing requires direct negotiation.

Compared to traditional SEO tool suites (Ahrefs + SEMrush + Moz, which together cost $500-1,000 monthly), Writesonic sits in a similar price bracket but solves a completely different problem. The comparison isn’t “Writesonic vs. Ahrefs” but rather “Writesonic + Ahrefs vs. just Ahrefs.” GEO measurement supplements rather than replaces traditional SEO tools.

The real cost calculation includes implementation effort (expect 10-20 hours configuring topics, prompts, and competitors initially) and ongoing operational overhead (2-4 hours weekly reviewing data and actioning insights). Factor $2,000-4,000 in setup time and $800-1,600 monthly in management time beyond platform costs.
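The arithmetic above can be sketched as a first-year cost estimate. This uses the dollar ranges quoted in this section. The function name and structure are illustrative, and applying the annual discount to platform fees only is an assumption. Note that the setup figure appears to imply roughly $200 per hour (10-20 hours), while the management figure appears to imply roughly $100 per hour (2-4 hours weekly at about four weeks per month), so substitute your own blended rate where it differs.

```python
def first_year_cost(platform_monthly, setup, management_monthly,
                    annual_discount=0.0):
    """Return a (low, high) first-year cost estimate in dollars.

    `platform_monthly`, `setup`, and `management_monthly` are
    (low, high) tuples. `annual_discount` is a fraction applied to
    platform fees only, which is an assumption of this sketch.
    """
    def total(i):
        platform = platform_monthly[i] * 12 * (1 - annual_discount)
        return round(platform + setup[i] + management_monthly[i] * 12)

    return total(0), total(1)


# Single brand, 5 competitors, basic tracking, no annual commitment.
basic = first_year_cost(
    platform_monthly=(500, 800),
    setup=(2_000, 4_000),
    management_monthly=(800, 1_600),
)
print(basic)  # (17600, 32800)

# Same scenario with a 15% annual-commitment discount on platform fees.
print(first_year_cost((500, 800), (2_000, 4_000), (800, 1_600), 0.15))
```

Even at the basic tier, people time accounts for more than half of the first-year total, which is why the platform fee alone understates the real commitment.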

Who Should Actually Use Writesonic

Here’s the honest assessment of who gains meaningful value from Writesonic versus who’s better off with manual tracking or waiting.

Ideal Users

Brands already investing in content marketing and SEO benefit immediately. You’ve built the content foundation; Writesonic reveals how it performs in AI search. B2B companies with 6+ month sales cycles particularly benefit: AI visibility influences early-stage research before prospects ever contact sales.

Agencies offering GEO services to multiple clients gain disproportionate value. One platform tracks multiple competitor sets across different topics, spreading costs across client retainers while delivering client-specific insights.

Enterprise brands with competitive categories (multiple strong competitors fighting for AI visibility) need systematic tracking to avoid being outmaneuvered in the AI channel.

Companies with strong Google SEO but weak AI visibility particularly benefit from the gap analysis. Writesonic quickly reveals why traditional rankings don’t translate to AI citations, and what to fix.

Poor Fits

Early-stage startups with limited content and no external citations won’t find enough signals to track. You need baseline visibility before measurement provides value. Build content and authority first; add systematic tracking when you’re getting mentioned but want to optimize positioning.

Local service businesses (restaurants, contractors, dentists) targeting geographic markets don’t yet benefit significantly. AI search remains primarily informational rather than transactional, and local business citations are sparse. This will change, but not yet.

Brands in extremely niche markets with minimal AI query volume face similar challenges. If people aren’t asking AI about your category, visibility tracking measures noise rather than opportunity.

Companies unwilling to invest in GEO execution shouldn’t pay for measurement. Writesonic identifies problems, but solving them requires content creation, outreach, and technical implementation. Visibility data without optimization resources is just expensive frustration.

The Future of GEO Measurement: What’s Coming

AI search evolves rapidly, and measurement platforms must adapt or become obsolete. Based on platform roadmap discussions and emerging market needs, here’s what next-generation GEO measurement likely includes.

Revenue attribution integration will connect visibility changes to business outcomes. Current platforms show “you appeared in 30% of queries”; future platforms will show “30% visibility correlates with 150 qualified leads and $450K pipeline.” This business-outcome focus will drive executive buy-in and budget allocation.

Real-time visibility testing will supplement historical pattern analysis. Rather than predicting visibility based on past conversations, platforms will actively query AI systems, testing actual current responses. This real-time layer provides certainty rather than probability.

Content generation integration will close the insight-to-execution gap. Platforms will identify content gaps and generate drafts optimized for AI citation. Rather than “you need content about X,” you’ll get “here’s AI-optimized content about X ready for editing.”

Multi-modal tracking will expand beyond text. As AI systems increasingly generate images, video, and voice responses, measurement must track visual and audio brand presence. “Your product appeared in 12 AI-generated image results” becomes a meaningful metric.

Predictive visibility modeling will forecast impact before implementation. Upload a content draft, and the platform predicts visibility impact. Adjust schema markup, and the platform estimates citation lift. This shifts from reactive measurement to proactive optimization planning.

Final Verdict: Should You Implement Writesonic?

After weeks of implementing Writesonic across multiple client accounts, here’s the unvarnished recommendation.

Use Writesonic if:

- You’re actively investing in GEO strategy and need systematic measurement to optimize efforts.

- You’re managing multiple brands or client accounts and need a scalable tracking infrastructure.

- You have content and citations to measure (you’re not starting from zero).

- You can act on insights with content creation, outreach, and technical resources.

- You need competitive intelligence beyond manual testing.

Skip Writesonic if:

- You’re exploring GEO casually without committed optimization resources.

- You’re early-stage with minimal content or external mentions.

- Your category has negligible AI search volume (yet).

- You need real-time precision rather than probabilistic visibility.

- Your budget prioritizes execution over measurement.

Consider alternatives if:

- You need basic visibility checking (manual testing suffices).

- You want integrated content generation (Writesonic measures but doesn’t create).

- You require complete platform coverage (current gaps exist).

- You expect automated execution (the platform informs but doesn’t implement).

For most brands serious about GEO implementation, Writesonic solves a critical problem: systematic visibility measurement that drives strategic decisions. It’s not perfect: integration gaps, data opacity, and execution limitations all constrain value.

But it’s currently the most comprehensive GEO measurement platform available, and competitive advantages accrue to early adopters who establish systematic tracking before competitors recognize the need.

The deeper truth: Writesonic isn’t just a tool; it’s infrastructure. Just as Google Analytics became essential infrastructure for web optimization, systematic AI visibility tracking is becoming essential for modern marketing. The question isn’t “should we measure AI visibility?” but rather “can we afford not to?”

As AI search grows from 5% of queries today to a projected 30-50% by 2027 (per Forrester Research), brands without visibility measurement risk optimizing blind while competitors race ahead.

If you’re building or scaling GEO services, Writesonic provides the measurement foundation that transforms GEO from experimental tactics into systematic strategy. That shift from “we should probably do something about AI search” to “here’s exactly how we’re performing and what to optimize next” is what actually drives results.

Related Resources

- What Is GEO? How to Optimize Your Brand for AI Citations

- GEO vs SEO: Why Traditional Search Optimization is Dead

- GEO Tools and Analytics: Measuring AI Search Performance

- The GEO Roadmap: 7 Steps to Start Ranking in Generative AI Engines Today

- 5 Key Signals LLMs Use to Decide AI Citations (and How to Earn Them)

- Schema Markup for AI: Technical GEO Implementation Guide

- How to Structure Authoritative GEO Content That AI Engines Will Cite

- GEO Mistakes to Avoid: Why Generic Content Fails in AI Overviews