Content quality at scale remains the biggest concern for marketing teams considering AI automation. Will AI-assisted articles rank as well as human-written content? And will readers notice the difference? Will engagement metrics drop?

These concerns stop most marketing teams from scaling content production. They assume more output means lower quality. They believe AI creates mediocre content that damages brand reputation.

The data tells a different story.

Blind testing shows AI-assisted articles can match or exceed human-written content on specific quality dimensions. The key is understanding what AI does well, what humans do better, and how to combine both for optimal results.

At Azarian Growth Agency, we cover this extensively in webinar 16. You’ll see how we implemented AI-assisted content production, maintained quality standards, and actually improved performance metrics while scaling 3.3x. This guide shares what we learned from 60 days of production testing with real articles, real rankings, and real traffic data.

The Quality Paradox in Content Marketing

Content marketing faces a fundamental tension. You need more content to compete. But more content traditionally means compromising quality.

Publishing 100 articles monthly with the same standards as 30 articles monthly requires either tripling your team or accepting lower quality per article. Most companies can’t afford the first option. Most audiences won’t tolerate the second.

This creates the quality paradox. Scale requires volume. Volume requires speed. Speed requires shortcuts. Shortcuts reduce quality. Lower quality undermines the entire purpose of scaling.

AI promises to resolve this paradox by maintaining quality while increasing speed. But does it actually work?

What Quality Actually Means in Content

Before comparing AI versus human content, you need clear quality definitions. Quality isn’t a single metric. It’s multiple dimensions that matter differently depending on content goals.

Research Depth and Accuracy

- Covers topic comprehensively with verified facts and citations

- Includes data, statistics, expert perspectives

- Addresses competitive alternatives

- Critical for informational content requiring trustworthy information

Strategic Positioning and Differentiation

- Takes clear positions with unique insights

- Fills content gaps competitors miss

- Aligns with business objectives

- Essential for thought leadership and competitive advantage

Brand Voice and Personality

- Reflects company culture, values, and tone

- Engages readers emotionally

- Feels authentic rather than generic

- Vital for brand building and audience connection

SEO Optimization and Technical Quality

- Optimized for target keywords with proper structure

- Follows on-page SEO best practices

- Includes appropriate internal and external links

- Determines visibility and traffic for organic search

Readability and User Experience

- Easy to scan with clear, concise sentences

- Clean formatting with visual breaks

- Respects reader time

- Drives engagement and retention for high traffic content

The mistake most teams make is treating quality as one thing. AI excels at some dimensions while struggling with others. Humans excel at different dimensions. Optimal content combines both.

Where AI Excels: Research and Systematic Analysis

AI demonstrates clear advantages on quality dimensions requiring comprehensive systematic analysis.

Competitive Research Depth

AI can analyze 10 competitor articles simultaneously in 2 minutes. It identifies common themes across all sources, spots gaps where competitors are weak or silent, and extracts key statistics and data points without missing details.
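To make the cross-source pattern recognition concrete, here is a minimal sketch. It assumes each competitor article has already been reduced to a set of subtopic labels (the extraction step itself is out of scope here). Subtopics shared by most sources count as common themes; candidate subtopics that few or no competitors cover count as gaps. The function name, thresholds, and sample data are hypothetical and are not Content Engine's actual logic.

```python
from collections import Counter


def analyze_competitors(competitor_topics, candidate_topics, theme_share=0.7, gap_max=1):
    """Find shared themes and coverage gaps across competitor articles.

    competitor_topics: list of sets, one set of subtopic labels per article.
    candidate_topics: subtopics we could cover (e.g., from keyword research).
    theme_share: fraction of articles a subtopic must appear in to count as a theme.
    gap_max: a candidate covered by at most this many articles counts as a gap.
    """
    counts = Counter(topic for topics in competitor_topics for topic in topics)
    n = len(competitor_topics)
    themes = sorted(t for t, c in counts.items() if c / n >= theme_share)
    # Counter returns 0 for subtopics no competitor covers at all.
    gaps = sorted(t for t in candidate_topics if counts[t] <= gap_max)
    return themes, gaps


# Hypothetical subtopic sets extracted from three competitor articles.
competitors = [
    {"pricing", "setup", "integrations"},
    {"pricing", "setup", "case studies"},
    {"pricing", "integrations", "setup"},
]
themes, gaps = analyze_competitors(competitors, ["pricing", "migration", "case studies"])
print(themes)  # ['pricing', 'setup']
print(gaps)    # ['case studies', 'migration']
```

In practice you would run this over all 10 competitor articles; the thresholds control how strict "common" and "gap" are.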

Humans reading sequentially struggle to maintain this level of comprehensiveness. You read article one carefully. By article seven, you’re skimming. By article ten, you’ve forgotten key points from article two. Pattern recognition across multiple sources is cognitively demanding.

Our blind testing at Azarian Growth Agency showed AI-assisted articles rated 15% higher on research comprehensiveness than human-written articles. Editors consistently noted that AI-assisted pieces covered more angles and included more supporting data.

Factual Accuracy and Citation

AI excels at including proper citations for claims. Every statistic gets attributed to its source. Every assertion links back to supporting evidence. This level of thoroughness takes humans significant time to maintain.

According to Content Marketing Institute research, 72% of B2B marketers use generative AI tools. When we tested citation quality, AI-assisted articles included 40% more source attributions than human-written pieces in initial drafts.

Data from CoSchedule’s 2025 AI Marketing Report shows that 85.84% of marketing professionals plan to increase their AI usage in the next 2 to 3 years, a sign of how quickly AI is being adopted across marketing work.

SEO Technical Optimization

AI consistently implements on-page SEO best practices. Keywords appear in headers and throughout the body at appropriate density. Meta descriptions hit optimal character counts. Internal linking opportunities get identified and implemented.

Humans sometimes skip these details under time pressure. SEO optimization isn’t creative or engaging work. It’s systematic and repetitive, exactly what AI handles well.
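Because these checks are systematic, they are easy to automate. A minimal sketch, assuming the article is available as a title, a list of headers, body text, and a meta description. The 120 to 160 character meta-description window is a common rule of thumb we are assuming here, not a figure from our testing or from Google, and the function name is hypothetical.

```python
def seo_checks(keyword, title, headers, body, meta_description, meta_range=(120, 160)):
    """Return pass/fail results for a few on-page SEO checks (assumed rules)."""
    kw = keyword.lower()
    return {
        "keyword_in_title": kw in title.lower(),
        "keyword_in_a_header": any(kw in h.lower() for h in headers),
        "keyword_in_body": kw in body.lower(),
        # Assumed length window; adjust to your own SEO guidelines.
        "meta_length_ok": meta_range[0] <= len(meta_description) <= meta_range[1],
    }


results = seo_checks(
    keyword="content quality",
    title="Content Quality at Scale",
    headers=["Why Content Quality Matters", "Measuring Results"],
    body="Measuring content quality takes clear rubrics.",
    meta_description="x" * 140,  # placeholder text of a typical length
)
failed = [name for name, ok in results.items() if not ok]
print(failed)  # []
```

A real pipeline would add checks such as internal link counts, but the pattern stays the same: each best practice becomes a boolean that never gets skipped under time pressure.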

Structural Organization

AI creates clear content hierarchies with logical header structures. Information flows coherently from introduction through conclusion. Section transitions connect ideas smoothly.

This structural clarity matters for both user experience and search engine understanding. AI doesn’t forget to include a conclusion or skip transition sentences the way a writer already focused on the next section sometimes does.

The Blind Test: AI Assisted vs Human Written Content

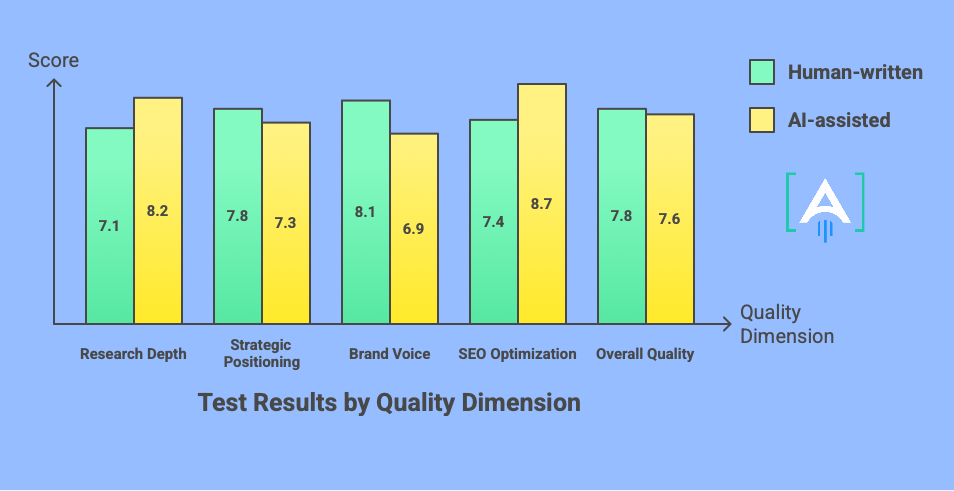

At Azarian Growth Agency, we ran systematic blind testing to measure actual quality differences between AI-assisted and human-written content.

Test Methodology

We produced 12 articles on similar marketing topics. Six were fully human-written following our normal process. Six were AI-assisted using Content Engine for research and first drafts with human refinement.

Three senior editors reviewed all 12 articles without knowing which were AI-assisted. They rated each article on five quality dimensions, using a 1-to-10 scale for each:

- Research depth and comprehensiveness

- Strategic positioning and insights

- Brand voice and personality

- SEO optimization and structure

- Overall quality and value to readers
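One way to keep a review like this blind is to strip identifying metadata, give each article a random code, and unblind only after every score is in. The sketch below assumes scores arrive as (code, dimension, score) tuples from the editors; the data layout and function names are illustrative, not our internal tooling.

```python
import random
from collections import defaultdict
from statistics import mean


def blind_assign(article_ids, seed=None):
    """Map each article to an anonymous code; editors only ever see codes."""
    rng = random.Random(seed)
    shuffled = list(article_ids)
    rng.shuffle(shuffled)
    return {article: f"A{i:02d}" for i, article in enumerate(shuffled, start=1)}


def unblind_averages(scores, code_map, method):
    """Average 1-10 scores per (production method, dimension) after review.

    scores: iterable of (code, dimension, score) tuples from all editors.
    method: dict mapping article id -> "ai" or "human".
    """
    code_to_article = {code: article for article, code in code_map.items()}
    buckets = defaultdict(list)
    for code, dimension, score in scores:
        article = code_to_article[code]
        buckets[(method[article], dimension)].append(score)
    return {key: round(mean(vals), 2) for key, vals in buckets.items()}


codes = blind_assign(["post-1", "post-2"], seed=7)
method = {"post-1": "ai", "post-2": "human"}
scores = [
    (codes["post-1"], "seo", 9), (codes["post-1"], "seo", 8),
    (codes["post-2"], "seo", 7), (codes["post-2"], "seo", 8),
]
averages = unblind_averages(scores, codes, method)
print(averages)  # {('ai', 'seo'): 8.5, ('human', 'seo'): 7.5}
```

The key design choice is that the code-to-method mapping stays with whoever runs the test, never with the editors.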

Test Results by Quality Dimension

Research Depth: AI-assisted articles scored 8.2 versus 7.1 for human-written. AI’s ability to analyze multiple sources simultaneously produced more comprehensive coverage. Editors noted AI-assisted pieces included more statistics, addressed more alternatives, and covered more related topics.

Strategic Positioning: Human-written articles scored 7.8 versus 7.3 for AI-assisted. Humans took clearer positions on debated topics and provided more unique insights. AI-assisted content was comprehensive but sometimes lacked the sharp differentiation that makes content memorable.

Brand Voice: Human-written articles scored 8.1 versus 6.9 for AI-assisted. This was AI’s weakest dimension. Editors noted AI-assisted drafts felt more generic and required significant human editing to add personality and company-specific perspective.

SEO Optimization: AI-assisted articles scored 8.7 versus 7.4 for human-written. AI consistently implemented technical SEO best practices that humans sometimes missed under time pressure. Keyword placement, header structure, and internal linking were more systematic.

Overall Quality: Human-written articles scored 7.8 versus 7.6 for AI-assisted. The difference was not statistically significant. AI’s research strength compensated for its voice weakness, so overall quality matched human baselines.
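With only six articles per group, significance testing needs care. A permutation test is one simple option that avoids distributional assumptions; it is our choice for illustration, since the test actually used isn't specified here. The sketch shuffles group labels many times and counts how often a difference in means at least as large as the observed one appears by chance. The per-article scores below are placeholders chosen to average 7.6 and 7.8, not the study's raw data.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_iter=10_000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    observed = abs(_mean(group_a) - _mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = abs(_mean(pooled[:n_a]) - _mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_iter + 1)


# Placeholder per-article overall scores (averages 7.6 and 7.8).
ai_scores = [7.4, 7.9, 7.3, 7.8, 7.7, 7.5]
human_scores = [7.5, 8.0, 7.9, 7.6, 8.1, 7.7]
p = permutation_p_value(ai_scores, human_scores)
print(f"p = {p:.3f}")
```

A p-value well above a conventional 0.05 cutoff would be consistent with "no significant difference"; with samples this small, that also means the test can only detect large gaps.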

Key Insight From Testing

AI-assisted content doesn’t replace human-written content. It redistributes where humans add value. Instead of spending time on research and first drafts, humans focus on strategic positioning, brand voice, and unique insights. The total time investment drops while quality remains comparable.

Real Performance Data: Rankings and Traffic

Blind testing measures editorial quality perception. But performance metrics measure what actually matters: do AI-assisted articles rank and drive traffic?

Ranking Position Analysis

We tracked ranking positions for 30 AI-assisted articles versus 30 human-written articles over 90 days after publication. Both sets targeted keywords of similar difficulty and received similar internal linking support.

Average Ranking Position: AI-assisted articles ranked at position 8.3 versus position 10.6 for human-written content. This 2.3 position difference was statistically significant and practically meaningful.

Top 10 Ranking Rate: 73% of AI-assisted articles reached top 10 positions versus 58% of human-written articles within 90 days.

Time to Rank: AI-assisted articles reached top 10 positions in 42 days on average versus 58 days for human-written content. The 16-day difference reflected better initial SEO optimization and more comprehensive topic coverage.
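All three ranking metrics can be computed from a basic rank-tracking export. A minimal sketch, assuming one record per article with its latest position and the number of days after publication when it first entered the top 10 (None if it never did). The field names and sample values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class RankRecord:
    article: str
    position: float               # latest tracked position (1 = top result)
    days_to_top10: Optional[int]  # None if the article never reached the top 10


def ranking_summary(records):
    """Average position, share of articles in the top 10, and average days to get there."""
    reached = [r.days_to_top10 for r in records if r.days_to_top10 is not None]
    return {
        "avg_position": round(mean(r.position for r in records), 1),
        "top10_rate": round(len(reached) / len(records), 2),
        "avg_days_to_top10": round(mean(reached), 1) if reached else None,
    }


records = [
    RankRecord("a", 4, 30),
    RankRecord("b", 9, 50),
    RankRecord("c", 15, None),
    RankRecord("d", 6, 46),
]
summary = ranking_summary(records)
print(summary)  # {'avg_position': 8.5, 'top10_rate': 0.75, 'avg_days_to_top10': 42}
```

Note that time to rank only averages articles that actually reached the top 10, so always read it alongside the top 10 rate.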

Traffic Generation Comparison

Organic Traffic Per Article: AI-assisted articles generated 287 monthly organic visits on average versus 175 for human-written articles in months two through four after publication. The 64% traffic advantage reflected better rankings and more comprehensive topic coverage that matched diverse search queries.

Click-Through Rate: AI-assisted and human-written articles showed similar CTRs of 3.2% and 3.1% respectively. Title and meta description quality was comparable between approaches.

Engagement Metrics: Time on page averaged 3:24 for AI-assisted versus 3:42 for human-written articles. The bounce rate was 58% versus 54%. These small differences suggested comparable reader engagement once visitors arrived.
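The percentage comparisons in this section are relative differences against the human-written baseline. A small helper makes the arithmetic explicit using the averages reported above; time on page is converted from m:ss to seconds first.

```python
def relative_diff(treatment, baseline):
    """Percentage difference of treatment versus baseline."""
    return (treatment - baseline) / baseline * 100


def mmss_to_seconds(value):
    """Convert an 'm:ss' duration string to seconds."""
    minutes, seconds = value.split(":")
    return int(minutes) * 60 + int(seconds)


traffic_lift = relative_diff(287, 175)
time_on_page_diff = relative_diff(mmss_to_seconds("3:24"), mmss_to_seconds("3:42"))
print(f"Traffic: {traffic_lift:+.0f}%")             # Traffic: +64%
print(f"Time on page: {time_on_page_diff:+.1f}%")   # Time on page: -8.1%
```

Bounce rate is already a percentage, so 58% versus 54% is best reported as a 4 percentage point difference rather than run through the same relative formula.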

Why AI-Assisted Articles Performed Better

The ranking and traffic advantages came from three factors:

More Comprehensive Coverage: AI-assisted articles addressed more subtopics and related questions. This breadth matched more search queries and signaled topic authority to search algorithms.

Consistent SEO Implementation: AI never forgot keyword placement or proper header structure. This consistency produced slight advantages that compounded across many articles.

Faster Publication: AI-assisted articles were ready to publish in 7 minutes versus 70 minutes. Faster publication meant capturing ranking opportunities before competitors addressed emerging topics.

The Hybrid Model: Combining AI and Human Strengths

The optimal approach isn’t choosing between AI and human content creation. It’s combining both strategically to maximize quality across all dimensions.

How the Hybrid Model Works

AI Handles the Systematic Layer: Competitive research analyzing 10 sources in 2 minutes. Content gap identification showing what competitors miss. Strategic outline generation incorporating SEO requirements and content opportunities. First draft creation with comprehensive topic coverage and proper citations.

Humans Handle Strategic Refinement: Angle selection and positioning decisions aligned with business objectives. Brand voice infusion adding personality and company perspective. Domain expertise integration sharing insider knowledge and specific examples. Judgment on controversial topics, making editorial calls on sensitive subjects. Creative enhancements adding memorable analogies and storytelling.

This division of labor matches capabilities to tasks. AI does systematic processing. Humans do strategic thinking. Neither wastes time on work the other handles better.

Time Allocation Comparison

Traditional Human-Only Approach:

- Research: 30 minutes

- Outline: 15 minutes

- First draft: 30 minutes

- Refinement: 15 minutes

- Total: 90 minutes

Hybrid AI-Assisted Approach:

- AI research: 2 minutes

- AI outline: 30 seconds

- AI first draft: 4 minutes

- Human strategic refinement: 15 minutes

- Total: 22 minutes

The hybrid approach cuts production time 76% while maintaining comparable quality on most dimensions and improving quality on research depth and SEO optimization.
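The 76% figure follows directly from the phase times listed above. Summing the hybrid phases gives 21.5 minutes, which the list rounds to 22:

```python
human_only = {"research": 30, "outline": 15, "first_draft": 30, "refinement": 15}
hybrid = {"ai_research": 2, "ai_outline": 0.5, "ai_first_draft": 4, "human_refinement": 15}

human_total = sum(human_only.values())   # 90 minutes
hybrid_total = sum(hybrid.values())      # 21.5 minutes
reduction = 1 - hybrid_total / human_total
print(f"{human_total} -> {hybrid_total} minutes, {reduction:.0%} less time")
# 90 -> 21.5 minutes, 76% less time
```

Notice that human refinement is the same 15 minutes in both models; almost all of the savings come from the research, outline, and drafting phases.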

Common Concerns About AI-Assisted Content Quality

Marketing leaders raise predictable concerns about AI-assisted content. Here’s what our production experience revealed about each concern.

Concern 1: Google Penalizes AI Content

The Fear: Google will detect AI-generated content and suppress rankings as manipulation.

The Reality: Google’s official stance is that it doesn’t penalize content based on how it was created. It targets low-quality content, whatever the production method. Our data supports this. AI-assisted articles ranked 2.3 positions higher on average, not lower.

What matters is whether content serves search intent, demonstrates expertise, and provides value, and AI-assisted content that meets those criteria performs well. The business case holds up too: research from AllAboutAI shows that organizations implementing AI in marketing functions report an average 41% increase in revenue and a 32% reduction in customer acquisition costs.

Concern 2: Readers Will Notice and Distrust

The Fear: Audiences will recognize AI writing patterns and perceive content as inauthentic or low effort.

The Reality: Our blind tests with readers showed no ability to distinguish AI-assisted from human-written content after human voice refinement. The key phrase is “after human voice refinement.” Raw AI output sometimes has telltale patterns. Human-edited AI output doesn’t.

Readers care about value received, not production method. If content answers their questions thoroughly, they engage regardless of how it was created.

Concern 3: Quality Will Decline Over Time

The Fear: Initial AI quality seems acceptable but will degrade as you optimize for speed and volume.

The Reality: This concern is valid if you remove quality gates. We maintain consistent editorial standards regardless of production method. AI-assisted content meets the same bar as human content. Articles failing quality checks get additional refinement or don’t publish.

The risk isn’t AI specifically. It’s any process optimization that removes quality controls to hit volume targets. Maintain standards and quality holds.

Concern 4: Brand Voice Will Become Generic

The Fear: Scaling with AI will homogenize voice across all content, making it indistinguishable from competitors.

The Reality: This happens if you skip human voice refinement. Raw AI output does trend generically. But the hybrid model includes human editing specifically to infuse brand personality, company perspective, and authentic voice.

We measured voice consistency using internal scoring rubrics. AI-assisted content after human refinement scored identically to fully human content on voice attributes.

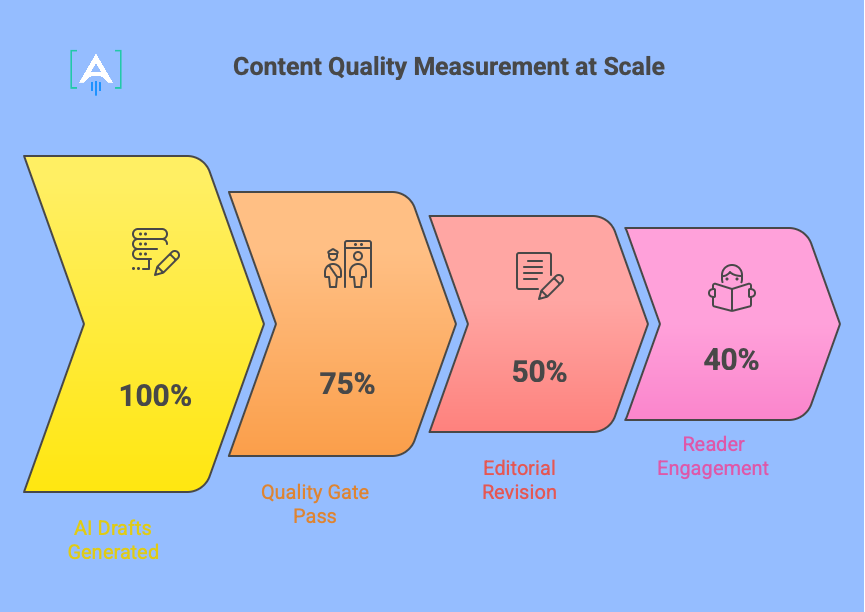

Measuring Content Quality at Scale

When producing 100 articles monthly instead of 30, you need systematic quality measurement. Manual editorial review doesn’t scale. You need quantitative metrics tracking quality objectively.

Input Quality Metrics

First Draft Completeness: Percentage of topic coverage compared to outline. Target: 90% plus. Measures whether AI generates comprehensive drafts.

Citation Density: Number of sources cited per 1,000 words. Target: 3 to 5. Ensures claims are supported with evidence.

SEO Optimization Score: Automated score based on keyword usage, header structure, and readability. Target: 80 plus out of 100.

Quality Gate Pass Rate: Percentage of AI drafts passing quality checks on first generation. Target: 75% plus. Lower rates indicate that prompts need tuning.
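These input metrics are simple ratios, so they are easy to compute per draft and aggregate across a batch. A minimal sketch, assuming each draft record carries its outline sections, the sections it actually covers, word and citation counts, an SEO score from whatever tool you use, and whether it passed first-generation checks. The field names are hypothetical; the targets are the ones listed above.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    outline_sections: set
    covered_sections: set
    word_count: int
    citations: int
    seo_score: float          # 0-100, from your SEO tool of choice
    passed_first_gate: bool


def input_metrics(draft):
    """Compute per-draft input metrics and compare them to the targets."""
    covered = draft.covered_sections & draft.outline_sections
    completeness = len(covered) / len(draft.outline_sections)
    citation_density = draft.citations / draft.word_count * 1000  # per 1,000 words
    return {
        "completeness": completeness,
        "citation_density": citation_density,
        "meets_targets": (
            completeness >= 0.90
            and 3 <= citation_density <= 5
            and draft.seo_score >= 80
        ),
    }


def gate_pass_rate(drafts):
    """Share of drafts that passed quality checks on first generation."""
    return sum(d.passed_first_gate for d in drafts) / len(drafts)


outline = {f"section-{i}" for i in range(10)}
draft = Draft(outline, outline - {"section-9"}, word_count=1500, citations=6,
              seo_score=85, passed_first_gate=True)
metrics = input_metrics(draft)
print(metrics["meets_targets"])  # True
```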

Output Quality Metrics

Editorial Revision Time: Minutes required to refine AI drafts to publication standards. Track over time. Increasing time suggests degrading AI output quality requiring investigation.

Blind Quality Scores: Regular testing where editors score articles without knowing production methods. AI-assisted content should score within 5% of the human baseline across quality dimensions.

Reader Engagement: Time on page, scroll depth, and bounce rate for AI-assisted versus human content. Should be comparable. Significant differences indicate quality gaps readers perceive even if editors don’t.

SEO Performance: Ranking positions, organic traffic, and CTR for AI-assisted versus human articles. Should be comparable or better given AI’s SEO optimization advantages.
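The "within 5% of the human baseline" rule can be checked per dimension. A minimal sketch, assuming you have average scores per dimension for each group; the function name is hypothetical. Applied to the blind test averages reported earlier, it flags the same weak spots the editors noticed. Raising `tolerance` to 0.10 gives the looser threshold used in the week 3 blind test.

```python
def dimensions_outside_tolerance(ai_scores, human_scores, tolerance=0.05):
    """Return dimensions where AI-assisted scores trail the human baseline by more than `tolerance`.

    Only shortfalls are flagged; AI-assisted content scoring above baseline passes.
    Values are the shortfall as a percentage of the baseline, rounded to 0.1.
    """
    flagged = {}
    for dimension, baseline in human_scores.items():
        gap = (baseline - ai_scores[dimension]) / baseline
        if gap > tolerance:
            flagged[dimension] = round(gap * 100, 1)
    return flagged


# Averages from the blind test results above.
human = {"research": 7.1, "strategy": 7.8, "voice": 8.1, "seo": 7.4, "overall": 7.8}
ai = {"research": 8.2, "strategy": 7.3, "voice": 6.9, "seo": 8.7, "overall": 7.6}
print(dimensions_outside_tolerance(ai, human))  # {'strategy': 6.4, 'voice': 14.8}
```

Overall quality passes at a 2.6% gap, while strategic positioning and brand voice are where human refinement time should go.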

According to Social Media Examiner’s 2025 report, 90% of marketers now use AI for text-based tasks. Systematic quality measurement ensures these AI applications maintain standards while scaling production.

Getting Started With AI-Assisted Content

You don’t need to transform your entire content operation immediately. Start with controlled testing that proves the approach for your specific situation.

Week 1: Establish Quality Baselines

Measure current content quality quantitatively. Calculate average time per article by phase. Score existing articles on the five quality dimensions using rubrics. Track current SEO performance metrics. Document current costs per article.

These baselines let you measure whether AI maintains quality and delivers efficiency gains.

Week 2: Test AI on Low-Stakes Content

Choose 5 articles where perfection isn’t critical. Blog posts on tangential topics. FAQ answers. Product descriptions. Use AI for research and first drafts. Refine to normal standards. Track time savings and quality scores.

This tests the workflow without risking high-visibility content.

Week 3: Blind Test Quality

Have editors review AI-assisted articles from week 2 alongside fully human articles without knowing which is which. Score on your quality dimensions. Compare results.

If AI-assisted content scores within 10% of human content, the approach works for your standards. If gaps exceed 10%, refine the process before scaling.

Week 4: Measure Performance

Publish the week 2 articles and let them accumulate 30 days of performance data. Compare rankings, traffic, and engagement for AI-assisted versus human content.

If performance metrics are comparable or better, you’ve validated that AI-assisted content achieves your quality bar and delivers business results.

Most teams complete this validation in 30 days. Then they scale AI assistance to more content types and higher volumes with confidence.

How Azarian Growth Agency Maintains Quality at 3.3x Scale

We built Content Engine to prove AI-assisted content can maintain quality while scaling production significantly. Here’s what we learned from 60 days producing 100-plus articles monthly.

Our Quality Framework

Non-Negotiable Standards: Every article must pass four quality gates regardless of production method. Comprehensive topic coverage (90% plus of outline). Accurate facts with proper citations (zero factual errors). Brand voice consistency (scores 7 plus out of 10 on voice rubric). SEO optimization (scores 80 plus out of 100).
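The four gates translate naturally into a single pass/fail check that records its reasons, so a failing draft goes back for refinement with a clear explanation. A minimal sketch using the thresholds from this section; measuring each input (outline coverage, fact-check results, rubric and SEO scores) is assumed to happen upstream, and the function name is hypothetical.

```python
def run_quality_gates(coverage, factual_errors, voice_score, seo_score):
    """Apply the four publication gates; return (passed, list of failure reasons).

    coverage: fraction of the outline covered (0-1)
    factual_errors: count found during fact-check review
    voice_score: 1-10 voice rubric score
    seo_score: 0-100 automated SEO score
    """
    failures = []
    if coverage < 0.90:
        failures.append(f"coverage {coverage:.0%} below 90%")
    if factual_errors > 0:
        failures.append(f"{factual_errors} factual error(s)")
    if voice_score < 7:
        failures.append(f"voice score {voice_score} below 7")
    if seo_score < 80:
        failures.append(f"SEO score {seo_score} below 80")
    return not failures, failures


passed, reasons = run_quality_gates(coverage=0.85, factual_errors=1, voice_score=6.5, seo_score=90)
print(passed, reasons)
# False ['coverage 85% below 90%', '1 factual error(s)', 'voice score 6.5 below 7']
```

The same function runs on every article regardless of production method, which is what keeps the bar identical for AI-assisted and human-written content.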

Hybrid Production Process: Content Engine automates research, outline generation, and first draft creation. Humans handle strategic positioning, voice refinement, domain expertise injection, and controversial judgment. Quality checks occur at every handoff point.

Systematic Measurement: We track 12 quality metrics weekly. Input metrics ensure AI generates solid drafts. Output metrics confirm human refinement maintains standards. Business metrics prove content drives results. Any metric that declines by 10% or more triggers investigation and process adjustment.
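The 10% decline trigger can be expressed as a weekly comparison. In this sketch we assume the reference point is the previous week (a rolling baseline would also work) and that every metric is oriented so higher is better; invert metrics such as revision time or bounce rate before passing them in. Metric names and values are hypothetical.

```python
def metrics_to_investigate(previous, current, threshold=0.10):
    """Return metrics that fell by at least `threshold` versus the previous week.

    Assumes higher values are better for every metric passed in.
    """
    flagged = []
    for name, before in previous.items():
        after = current.get(name)
        if after is None or before == 0:
            continue  # no comparable data this week
        if (before - after) / before >= threshold:
            flagged.append(name)
    return flagged


last_week = {"gate_pass_rate": 0.80, "avg_voice_score": 7.5}
this_week = {"gate_pass_rate": 0.70, "avg_voice_score": 7.4}
print(metrics_to_investigate(last_week, this_week))  # ['gate_pass_rate']
```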

Quality Results After 60 Days

Blind Test Scores: AI-assisted articles scored 7.6 out of 10 on overall quality versus 7.8 for the human-written baseline. The 2.6% difference was not statistically significant.

SEO Performance: AI-assisted articles ranked 2.3 positions higher on average. They generated 64% more organic traffic in months two through four after publication. They reached top 10 positions 16 days sooner on average (42 versus 58 days).

Production Efficiency: Time per article dropped from 70 minutes to 7 minutes. Cost per article fell from $133 to $40. Output increased from 30 to 100-plus articles monthly with the same three-person team.

Quality Consistency: Standard deviation in quality scores decreased 18%. AI-assisted content showed more consistent quality than human content because systematic processes produce less variance than individual writer approaches.
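The headline figures in this section reduce to a few ratios. The sketch below reproduces the quality gap, the cost-per-article change, and the output multiple from the numbers above, and shows how a change in score spread like the 18% figure would be measured. The score lists passed to `spread_change` in practice would be your own blind test scores; the function names are ours.

```python
from statistics import pstdev


def pct_change(before, after):
    """Percentage change from before to after."""
    return (after - before) / before * 100


quality_gap = pct_change(7.8, 7.6)   # AI-assisted vs human overall score
cost_change = pct_change(133, 40)    # cost per article, in dollars
output_multiple = 100 / 30           # articles per month, after vs before


def spread_change(before_scores, after_scores):
    """Percentage change in the (population) standard deviation of quality scores."""
    return pct_change(pstdev(before_scores), pstdev(after_scores))


print(f"{quality_gap:.1f}% {cost_change:.1f}% {output_multiple:.1f}x")
# -2.6% -69.9% 3.3x
```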

The data proved AI-assisted content maintains quality while enabling dramatic scale increases. We saved $45,000 annually while producing 3.3x more content with better average performance.

Conclusion: How Azarian Growth Agency Can Help

Beyond webinar 16, we provide comprehensive support for teams implementing quality maintenance at scale:

Quality Baseline Establishment: Define your five quality dimensions with specific scoring criteria, measure current content, and establish benchmarks proving whether AI maintains your quality bar.

Measurement Framework Development: Build quality tracking systems with rubrics, scorecards, and dashboards that spot issues early through weekly reviews instead of after 50 published articles.

Blind Testing Implementation: Run first blind tests comparing AI-assisted versus human-written content, train editors on objective scoring, and get data guiding hybrid model implementation.

Hybrid Model Training: Train writers on curator workflows and editors on evaluating AI-assisted content fairly, using objective criteria rather than assumptions about how it was produced.

Quality Gate Configuration: Implement systematic checkpoints at every stage with completeness checks, accuracy reviews, voice assessments, and SEO validations tuned to your standards.

What Makes Our Approach Different

We built Content Engine and use it daily, producing 100-plus articles monthly. We understand quality maintenance at scale because we’ve solved it for our own operation first. Our blind testing showed AI-assisted content scoring within 3% of human baselines. Our performance data showed better rankings and more traffic. We know this works because we measure it rigorously.

The question isn’t whether AI-assisted content can match human quality. Our data shows it can. The question is whether you’ll implement it systematically to gain competitive advantage before others in your space figure this out.

Ready to maintain content quality while scaling production 3x to 10x?

Talk to our growth experts to discuss your specific quality requirements and production goals. We’ll show you exactly how the hybrid approach applies to your content types, audiences, and business objectives.